Introduction to Core ML

As mobile applications become increasingly sophisticated, implementing machine learning directly on iOS devices has become a significant advantage for developers. Core ML represents Apple's solution for integrating machine learning capabilities directly into iOS applications, offering powerful on-device inference without compromising privacy or performance.

Unlocking iOS Superpowers with Core ML

While developing an advanced object detection system for a retail inventory application, I encountered a significant challenge: scanning products needed to be fast, accurate, and work reliably even without internet connectivity. Our initial cloud-based solution suffered from latency issues and couldn't function in warehouses with poor connectivity.

Implementing Core ML with a custom-trained YOLOv5 model transformed our application's performance. By processing object detection directly on-device, we achieved near-instantaneous product recognition (under 100ms on iPhone 12 and newer) while completely eliminating our dependency on network connectivity. The privacy benefits were substantial as well—customer data never left their devices. Most impressively, our on-device model achieved 94% accuracy compared to our server-side solution's 96%, a negligible tradeoff considering the massive gains in speed and reliability.

What is Core ML in iOS?

Core ML is Apple's machine learning framework for running trained models inside apps on iOS and Apple's other platforms. It provides a unified API that supports several ML domains, including:

- Computer Vision: Object detection, face analysis, image classification

- Natural Language Processing: Text classification, language identification, sentiment analysis

- Sound Analysis: Sound classification (speech recognition itself is provided by the separate Speech framework)

- Time Series Analysis: Activity classification, motion analysis

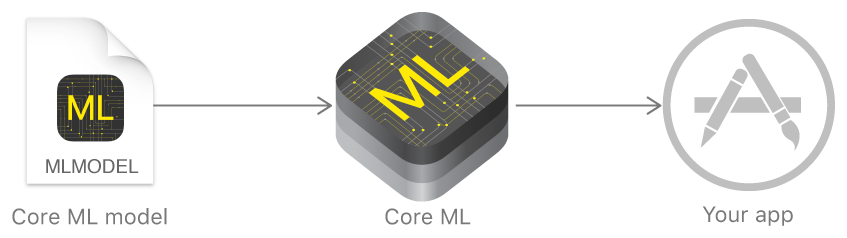

At the architecture level, Core ML is a high-level framework built on lower-level primitives such as Accelerate, BNNS, and Metal Performance Shaders. This layered approach lets Core ML choose the appropriate hardware (Neural Engine, GPU, or CPU) automatically, based on model requirements and device capabilities.
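
The hardware choice can also be constrained explicitly, which is useful when comparing backends. A minimal sketch, assuming a compiled model at a caller-supplied URL (the function name `loadModel` is hypothetical):

```swift
import CoreML

/// Loads a compiled model (.mlmodelc) with an explicit compute-unit preference.
/// `modelURL` is a hypothetical location supplied by the caller.
func loadModel(at modelURL: URL, preferring units: MLComputeUnits = .all) throws -> MLModel {
    let configuration = MLModelConfiguration()
    // .all lets Core ML pick among CPU, GPU, and Neural Engine.
    // .cpuOnly and .cpuAndGPU narrow the choice, e.g. to compare latency per backend.
    configuration.computeUnits = units
    return try MLModel(contentsOf: modelURL, configuration: configuration)
}
```

Leaving the default of `.all` is usually the right starting point; restricting compute units is mainly a debugging and benchmarking aid.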

✅ Technical Advantages of Core ML in iOS

- On-device Processing: Performs inference locally, eliminating network latency and dependency.

- Hardware Acceleration: Automatically utilizes the Neural Engine, GPU, or CPU depending on model requirements and device capabilities.

- Privacy-Preserving: Sensitive data stays on the user's device, which can simplify compliance with regulations such as GDPR, CCPA, and HIPAA where they apply.

- Performance Optimization: Models are automatically optimized during compilation for specific device architectures.

- Energy Efficiency: Designed with battery life considerations, particularly important for always-on ML applications.

- Low Memory Footprint: Models can be quantized to reduce memory requirements with minimal accuracy loss.

- Cross-Framework Integration: Seamlessly interfaces with Vision, Sound Analysis, Natural Language, and Create ML frameworks.

🤟 Core ML in iOS: Latest Technical Features (iOS 17+)

- ML Program: A model representation (available since iOS 15) that expresses the network as a program, supporting more complex ML pipelines with intermediate computations and conditional branches.

- On-Demand Resources: Models can be packaged as on-demand resources and downloaded only when needed, reducing initial app size.

- Quantization Enhancements: Improved weight and activation quantization techniques offering better performance-accuracy tradeoffs.

- Dynamic Model Deployment: Models can be downloaded after installation and compiled on the device, so they can be updated without shipping a full app update.

- Enhanced Compiler Optimizations: Better fusion of operations and memory layout optimizations.

- Improved GPU Acceleration: More operations can now be accelerated on the GPU, especially for custom layers.

- Model Encryption: Built-in encryption for protecting proprietary ML models.
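
Several of these features come down to obtaining a model file after installation and compiling it on the device. A rough sketch of that step, assuming the app has already downloaded a `.mlmodel` file (the function name `installModel` and the storage location are illustrative choices, not a prescribed pattern):

```swift
import CoreML
import Foundation

/// Compiles a downloaded .mlmodel file and keeps the result in Application Support.
/// `downloadedURL` is assumed to point at a file the app has already fetched.
@available(iOS 16.0, macOS 13.0, tvOS 16.0, watchOS 9.0, *)
func installModel(from downloadedURL: URL, named name: String) async throws -> MLModel {
    // compileModel(at:) writes a .mlmodelc directory to a temporary location.
    let temporaryURL = try await MLModel.compileModel(at: downloadedURL)

    let fileManager = FileManager.default
    let supportDirectory = try fileManager.url(for: .applicationSupportDirectory,
                                               in: .userDomainMask,
                                               appropriateFor: nil,
                                               create: true)
    let permanentURL = supportDirectory.appendingPathComponent("\(name).mlmodelc")

    // Replace any previously installed copy so later launches use the new model.
    if fileManager.fileExists(atPath: permanentURL.path) {
        try fileManager.removeItem(at: permanentURL)
    }
    try fileManager.moveItem(at: temporaryURL, to: permanentURL)
    return try MLModel(contentsOf: permanentURL)
}
```

Moving the compiled model out of the temporary directory matters because the system may purge temporary files between launches.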

Step-by-Step Guide to Implement Core ML in iOS

Step 1: Model Acquisition and Preparation

Option A: Create ML for Custom Model Training

Use Apple’s Create ML to train your own model, or download one from:

Option B: Convert Existing Models

You can also convert models from other formats using coremltools:

Step 2: Understanding Model Integration in Xcode

When you add a .mlmodel file to your Xcode project, the build system automatically:

- Compiles the model into an optimized binary format (.mlmodelc)

- Generates Swift/Objective-C wrapper classes with type-safe APIs

- Integrates the model into your app bundle

The generated class includes:

- An initializer for loading the model

- Input/output class definitions

- A prediction method tailored to your model's specification
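
The generated class is a thin layer over the general `MLModel` API. The sketch below shows the same three pieces without a generated wrapper, assuming a compiled model at a caller-supplied URL and caller-supplied feature names (the function name `predict` is hypothetical):

```swift
import CoreML

/// Runs one prediction through the untyped MLModel API.
/// `inputs` maps the model's input feature names to values (numbers, strings,
/// MLMultiArray, pixel buffers); the names depend on the specific model.
func predict(withModelAt modelURL: URL, inputs: [String: Any]) throws -> [String: MLFeatureValue] {
    // 1. Initialization: what the generated initializer wraps.
    let model = try MLModel(contentsOf: modelURL)

    // 2. Input definition: the generated input class plays this role with typed properties.
    let provider = try MLDictionaryFeatureProvider(dictionary: inputs)

    // 3. Prediction: the generated method returns a typed output instead of a provider.
    let output = try model.prediction(from: provider)

    var result: [String: MLFeatureValue] = [:]
    for name in output.featureNames {
        result[name] = output.featureValue(for: name)
    }
    return result
}
```

In practice the generated class is preferable because feature names and types are checked at compile time; the untyped form is mainly useful for models loaded at runtime.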

Step 3: Making Predictions with Core ML

Image Classification Implementation Details:
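
A minimal sketch of image classification through the Vision framework, assuming the caller already has a loaded classifier model and a `CGImage` (the function name `classify` and the `topK` parameter are illustrative):

```swift
import CoreML
import Vision
import CoreGraphics

/// Classifies an image and returns the `topK` most confident labels.
/// Assumes `model` is an image classifier; other model types yield an empty result.
func classify(_ image: CGImage,
              using model: MLModel,
              topK: Int = 3) throws -> [(label: String, confidence: Float)] {
    let visionModel = try VNCoreMLModel(for: model)
    let request = VNCoreMLRequest(model: visionModel)
    // Vision resizes the image to the model's input size; this controls how.
    request.imageCropAndScaleOption = .centerCrop

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])   // synchronous; call off the main thread

    let observations = request.results as? [VNClassificationObservation] ?? []
    return observations
        .sorted { $0.confidence > $1.confidence }
        .prefix(topK)
        .map { (label: $0.identifier, confidence: $0.confidence) }
}
```

Routing the request through Vision avoids manual resizing and pixel-format conversion, since Vision adapts the image to whatever input the model declares.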

Real-World Example: Emotion Detection in Photos

For a more complex example, let's implement an emotion detection feature for a journaling app:
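
One possible shape for this feature is a two-stage pipeline: Vision finds faces, then a classifier labels each cropped face. The sketch below assumes a hypothetical emotion classifier supplied by the caller and a recent SDK in which `VNDetectFaceRectanglesRequest.results` is typed as `[VNFaceObservation]?`; the names `FaceEmotion`, `pixelRect`, and `detectEmotions` are illustrative.

```swift
import CoreML
import Vision
import CoreGraphics

struct FaceEmotion {
    let boundingBox: CGRect   // normalized, lower-left origin (Vision convention)
    let label: String
    let confidence: Float
}

/// Converts a normalized Vision rect (lower-left origin) into pixel
/// coordinates with a top-left origin, as expected by CGImage.cropping(to:).
func pixelRect(forNormalized box: CGRect, imageWidth: Int, imageHeight: Int) -> CGRect {
    let width = CGFloat(imageWidth)
    let height = CGFloat(imageHeight)
    return CGRect(x: box.minX * width,
                  y: (1 - box.maxY) * height,
                  width: box.width * width,
                  height: box.height * height).integral
}

/// Detects faces, then classifies each cropped face with `emotionModel`
/// (a hypothetical image classifier whose labels are emotion names).
func detectEmotions(in image: CGImage, emotionModel: MLModel) throws -> [FaceEmotion] {
    let faceRequest = VNDetectFaceRectanglesRequest()
    try VNImageRequestHandler(cgImage: image, options: [:]).perform([faceRequest])
    let faces = faceRequest.results ?? []

    let visionModel = try VNCoreMLModel(for: emotionModel)
    var emotions: [FaceEmotion] = []
    for face in faces {
        let rect = pixelRect(forNormalized: face.boundingBox,
                             imageWidth: image.width,
                             imageHeight: image.height)
        guard let crop = image.cropping(to: rect) else { continue }

        let request = VNCoreMLRequest(model: visionModel)
        try VNImageRequestHandler(cgImage: crop, options: [:]).perform([request])
        let candidates = request.results as? [VNClassificationObservation] ?? []
        guard let best = candidates.max(by: { $0.confidence < $1.confidence }) else { continue }

        emotions.append(FaceEmotion(boundingBox: face.boundingBox,
                                    label: best.identifier,
                                    confidence: best.confidence))
    }
    return emotions
}
```

Keeping the coordinate conversion in its own function makes the one error-prone step, the flipped y-axis, easy to check in isolation.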

Advanced Core ML Integration Techniques

Model Versioning and A/B Testing

Create a model manager that can dynamically select between different versions:
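
A minimal sketch of such a manager, assuming each version ships as a compiled model at a known URL and that users carry a stable identifier. The types `ModelVariant` and `ModelManager` and the traffic-share scheme are hypothetical choices for illustration.

```swift
import CoreML
import Foundation

struct ModelVariant {
    let version: String
    let url: URL              // location of a compiled .mlmodelc (hypothetical)
    let trafficShare: Double  // fraction of users; shares are expected to sum to 1
}

final class ModelManager {
    private let variants: [ModelVariant]
    private var cache: [String: MLModel] = [:]

    init(variants: [ModelVariant]) {
        precondition(!variants.isEmpty, "at least one variant is required")
        self.variants = variants
    }

    /// Maps a user identifier to a variant so the same user always gets the same model.
    func variant(forUser userID: String) -> ModelVariant {
        // FNV-1a hash: unlike Swift's Hasher, it is stable across app launches.
        var hash: UInt64 = 0xcbf29ce484222325
        for byte in userID.utf8 {
            hash ^= UInt64(byte)
            hash = hash &* 0x100000001b3
        }
        let point = Double(hash % 10_000) / 10_000   // value in [0, 1)

        var cumulative = 0.0
        for variant in variants {
            cumulative += variant.trafficShare
            if point < cumulative { return variant }
        }
        return variants[variants.count - 1]   // fallback if shares sum to less than 1
    }

    /// Loads the model for the user's variant, reusing an already loaded instance.
    func model(forUser userID: String) throws -> MLModel {
        let chosen = variant(forUser: userID)
        if let cached = cache[chosen.version] { return cached }
        let model = try MLModel(contentsOf: chosen.url)
        cache[chosen.version] = model
        return model
    }
}
```

Deterministic bucketing keeps each user's experience consistent across sessions, which is what makes a comparison between versions meaningful. The cache here is not thread-safe; a production version would need synchronization.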

Batch Processing for Efficiency

When processing multiple inputs, use batch processing for better efficiency:
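
A short sketch of batch prediction with `MLArrayBatchProvider`, assuming each input is a dictionary keyed by the model's input feature names (the helper names `makeBatch` and `predictBatch` are hypothetical):

```swift
import CoreML

/// Wraps per-item feature dictionaries in a single batch provider.
func makeBatch(from inputs: [[String: Any]]) throws -> MLArrayBatchProvider {
    let providers: [MLFeatureProvider] = try inputs.map {
        try MLDictionaryFeatureProvider(dictionary: $0)
    }
    return MLArrayBatchProvider(array: providers)
}

/// Runs all inputs through the model in one call and returns per-item outputs
/// in the same order as the inputs.
func predictBatch(model: MLModel, inputs: [[String: Any]]) throws -> [MLFeatureProvider] {
    let batch = try makeBatch(from: inputs)
    let results = try model.predictions(from: batch, options: MLPredictionOptions())
    return (0..<results.count).map { results.features(at: $0) }
}
```

Submitting one batch gives Core ML the chance to schedule the work together rather than paying per-call overhead for each item; how much this helps depends on the model and device, so it is worth measuring.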

Selecting the Optimal Core ML Model

Consider these technical factors when choosing a model:

- Model Size vs. Accuracy: Quantized models (8-bit integer) are smaller and faster but slightly less accurate than full-precision (32-bit float) models.

- Architecture Tradeoffs: MobileNet variants optimize for mobile, while EfficientNet offers better accuracy-to-parameter ratios.

- Hardware Compatibility: Some operations work best on Neural Engine, others on GPU. Profile your model to understand its hardware affinity.

- Input Processing Requirements: Consider the preprocessing overhead when selecting between models requiring different input formats.

- Memory Requirements: Profile your model's peak memory usage during inference with Instruments.
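
The size side of the first tradeoff is simple arithmetic: weight storage is roughly the parameter count times the bits per weight. A small sketch with a hypothetical 5-million-parameter model (the figure is illustrative and ignores metadata and any non-weight data):

```swift
/// Approximate bytes needed to store the weights alone.
func estimatedWeightBytes(parameterCount: Int, bitsPerWeight: Int) -> Int {
    (parameterCount * bitsPerWeight + 7) / 8   // round up to whole bytes
}

let parameterCount = 5_000_000   // hypothetical model size
let float32Bytes = estimatedWeightBytes(parameterCount: parameterCount, bitsPerWeight: 32)
let int8Bytes = estimatedWeightBytes(parameterCount: parameterCount, bitsPerWeight: 8)

print("float32: \(float32Bytes) bytes")   // 20000000 bytes, about 20 MB
print("int8:    \(int8Bytes) bytes")      // 5000000 bytes, about 5 MB
```

The fourfold reduction applies to storage; the effect on accuracy and latency has to be measured per model.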

Advanced Debugging and Profiling

Performance Profiling
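
Instruments gives the most detailed picture, but a simple in-app timing harness is often enough to compare models or compute-unit settings. A minimal sketch (the names `LatencyStats` and `measureLatency` are hypothetical, and the percentile is an approximation):

```swift
import Foundation

struct LatencyStats {
    let median: Double   // milliseconds
    let p95: Double      // milliseconds, approximate 95th percentile
}

/// Times `work` repeatedly after a few warm-up runs, which absorb
/// one-time costs such as model loading and cache population.
func measureLatency(warmup: Int = 3,
                    iterations: Int = 50,
                    _ work: () throws -> Void) rethrows -> LatencyStats {
    precondition(iterations > 0, "iterations must be positive")
    for _ in 0..<warmup { try work() }

    var samples: [Double] = []
    samples.reserveCapacity(iterations)
    for _ in 0..<iterations {
        let start = DispatchTime.now().uptimeNanoseconds
        try work()
        let end = DispatchTime.now().uptimeNanoseconds
        samples.append(Double(end - start) / 1_000_000)
    }

    samples.sort()
    let p95Index = min(samples.count - 1, Int(Double(samples.count) * 0.95))
    return LatencyStats(median: samples[samples.count / 2], p95: samples[p95Index])
}
```

A typical call wraps a single prediction, for example `let stats = try measureLatency { _ = try model.prediction(from: input) }`, where `model` and `input` are whatever the app already uses. Reporting a median and a tail percentile is more informative than a mean, since the first runs and thermal throttling skew averages.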

Input Validation

Create a robust input validation system:
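
One way to approach this is to check inputs against the feature descriptions the model itself declares, before calling `prediction`. A sketch under that assumption (the error type and function names are hypothetical):

```swift
import CoreML

enum InputValidationError: Error, Equatable {
    case missingFeature(String)
    case invalidValue(String)
}

/// Checks `input` against a set of declared input descriptions.
/// Throws on the first required feature that is missing or has a
/// value the description does not allow (wrong type, shape, or size).
func validate(_ input: MLFeatureProvider,
              descriptions: [String: MLFeatureDescription]) throws {
    for (name, description) in descriptions {
        guard let value = input.featureValue(for: name) else {
            if description.isOptional { continue }
            throw InputValidationError.missingFeature(name)
        }
        guard description.isAllowedValue(value) else {
            throw InputValidationError.invalidValue(name)
        }
    }
}

/// Convenience overload that reads the descriptions from the model.
func validate(_ input: MLFeatureProvider, against model: MLModel) throws {
    try validate(input, descriptions: model.modelDescription.inputDescriptionsByName)
}
```

Validating up front turns an opaque prediction failure into an error that names the offending feature, which is easier to log and act on.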

Technical Resources to Master Core ML

- Apple's Core ML Tools Documentation

- WWDC Videos on Core ML Implementation

- Metal Performance Shaders for Custom Layers

- Netron for Visualizing Model Architecture

- Core ML Performance Benchmarks

Future Directions in On-Device ML

Core ML continues to evolve with each iOS release. Watch for these emerging capabilities:

- Multi-model pipelines: Chaining multiple models with ML Program

- Federated learning: Training across devices while preserving privacy

- On-device fine-tuning: Personalizing models to individual users

- Advanced compression techniques: Making larger models viable on mobile

By thoroughly understanding Core ML's technical architecture and implementation patterns, you'll be well-equipped to build sophisticated machine learning features directly into your iOS applications, delivering exceptional user experiences while maintaining privacy and performance.
